Moral Repair: A Black Exploration of Tech

How can we all thrive as we navigate technology, automation, and AI in the Information Age? What have technologists, philosophers, care practitioners, and theologians learned about the innovations and worldviews shaping a new century of unprecedented tech breakthroughs and social change?

On Moral Repair: A Black Exploration of Tech, hosts Annanda Barclay and Keisha McKenzie talk with tech and spiritual leaders. Their conversations inspire curiosity about tech while showcasing practical wisdom from the African continent and diaspora to nurture wellbeing for all.

Moral Repair expands mainstream tech narratives, celebrates profound insight from Black philosophy and culture, and promotes technology when it serves the common good. Listeners leave each episode with new ways to think about tech’s impacts and apply practical wisdom in their own lives.

In Season 1, episode themes range from recommendation algorithms and a Black ethical standard for evaluating tech to interactive holograms and hip hop as cultural memory tools. Other episodes explore moral repair, ideologies and philosophies shaping Silicon Valley, AI ethics, inclusive design, and tech well-being.

Guests include Aral Balkan (Small Tech Foundation), Dr. Scott Hendrickson (data scientist), the Rev. Dr. Otis Moss III (pastor, filmmaker, storyteller), Stewart Noyce (technologist and marketer), Zuogwi Reeves (minister and scholar), the Rev. Dr. Sakena Young-Scaggs (Stanford University’s Office of Religious & Spiritual Life), Judith Shulevitz (culture critic), and Dr. Damien Williams (professor and researcher on science, technology, and society).

Season 2

Trailer

On Moral Repair: A Black Exploration of Tech, hosts Annanda Barclay and Keisha McKenzie talk with tech and wisdom leaders. Their conversations inspire curiosity about tech while showcasing practical wisdom from the African continent and diaspora to nurture wellbeing for all.

-

Annanda: An ancient form of trauma has a new name. It’s called moral injury. And it defines a deep spiritual and existential pain that arises when something violates our core beliefs. In our increasingly connected world, we’re seeing lots of moral injury.

Keisha: Just take our AI age, which often feels like it's doing more harm than good.

OM3: What scares me about technology is the profit motive. Profit plus human frailty, historically, has meant tragedy for someone.

Damien: The very people who are supposed to be regulated by these policy changes are the people who are writing the laws, or at the very least “advising” on them.

Adorable: Technology, it's solving the problems of other technologists and industry. It's not really solving the problems of everyday people.

[SFX: A crescendo in the music]

Keisha: AI is overwhelming.

Annanda: So here's the question. Can we ever truly mend the damage that AI is causing us? That is, what could moral repair in our modern technological era look like?

Keisha: This isn't just about patching systems. It's about caring for people. Technology should serve humanity… not the other way around.

Annanda: We’ve got an antidote to moral injury.

[SFX: A shift in tone]

Jane: Africana philosophy, it's so rich with a broadened conception of technology, it's all about cultivating human well being, cultivating sustainability…

Rev. Dr. SYS: Is this life-giving or death-dealing? Because we need more life-giving things.

[SFX: Our theme song or a play off it leading into it]

Keisha: Welcome to a new season of the two-time AMBIE-nominated podcast Moral Repair: A Black Exploration of Tech. A series about the innovations that make our world… disrupt our societies… and how we can repair the damage.

Annanda: I'm Annanda Barclay, an end of life planner, chaplain, and moral injury researcher. I think a lot about the impact moral injury can have on living a meaningful and dignified life, all the way to the end.

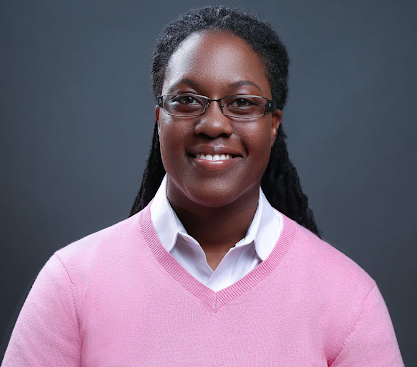

Keisha: And I'm Keisha McKenzie, a technical communicator and narrative strategist with a knack for asking tough questions and making experts accessible.

Annanda: This season, we'll be your guides through the maze of AI. And its use in political strategies, conflicts…

Keisha: Government regulation and play. All while we try to answer the big question — who's actually responsible for the moral repair we so badly need in AI?

Annanda: And how can Africana wisdom guide us towards healing and accountability?

Keisha: Let's take a hard look at the AI that powers and distorts our world.

Annanda: And let's start the process of repair together. Join us for Moral Repair: A Black Exploration of Tech launching April 24th.

Government Regulation: Afrofuturism and Equity in Tech

Season 2 | Episode 1

What do we need to know about recent regulatory guidelines on AI trust and safety? What does one recent federal regulator think still needs attention? How could critical Black digital perspectives reshape the conversation? Annanda and Keisha talk Afrofuturism and equity with Dr. Alondra Nelson, deputy director for science and society at the White House Office of Science and Technology Policy from 2021-2023.

Talk to us online: at Instagram (@moralrepairpodcast), on X (@moralrepair), and on LinkedIn.

The Social Text Afrofuturism issue.

About the Black Panther Party’s clinics.

Nelson + Lander explain the AI Bill of Rights (WIRED)

-

Lead Guest: Alondra Nelson, PhD

EPISODE BIG QUESTION: What does tech policy look like behind the curtain? And how can Afrofuturist and Black cultural principles make that ecosystem work for those who’ve been left behind?

DESCRIPTION: What do we need to know about recent regulatory guidelines on AI trust and safety? What does one recent federal regulator think still needs attention? How could critical Black digital perspectives reshape the conversation? Annanda and Keisha talk Afrofuturism and equity with Dr. Alondra Nelson, deputy director for science and society at the White House Office of Science and Technology Policy from 2021-2023.

[00:00] INTRO

SOUND: curious background music

Annanda: Welcome everybody to our first episode of our second season of Moral Repair: A Black Exploration of Tech!

Ya girls are back at it again! And we won’t stop.

So this season, we’re focusing specifically on AI and technology. We’ll be talking about government, cobalt mining, policing, and believe it or not, the impact big tech has on American farmlands.

Keisha: I’m excited to get into it. We’re talking about how AI shows up in different parts of our lives, how we can use it in positive directions and, where it’s harmful, mitigate that damage.

Annanda: There's a lot that Africana wisdom, Black wisdom, can say about technology: how do we take what AI gives, consider it, and sprinkle some Africana wisdom seasonings on it? That’s the business we’re up to this second season.

Keisha: In these conversations with tech experts, we’ve talked around the role government plays with tech. On today’s show, we’re focusing on the policy environment around new technologies. We ask the big question:

What does tech policy look like behind the curtain? And how can Afrofuturist and Black cultural principles make that ecosystem work for those who’ve been left behind?

Annanda: ‘Cause so many people are left behind.

Keisha: And we can do things differently.

SOUND: title music starts

Annanda: I’m Annanda Barclay…

Keisha: I’m Keisha McKenzie…

Annanda: This is Moral Repair: A Black Exploration of Tech. A show where we explore the social and moral impacts of tech… and share wisdom from Africana culture for caring for what tech has broken.

SEGMENT A: Regulating Emerging Tech (Problems in the policy ecosystem)

Keisha: Annanda, did I ever tell you I worked for Congress once?

Annanda: Nope, I’d remember that. *laughs*

Keisha: Yeah, I interned with committee lawyers in the House of Representatives halfway through grad school. I wanted to learn about government as a technology for managing power and decision making.

SOUND: title music fades out

While I was there, I learned a *lot* about the gap between the myths and the realities of government.

That was during Obama's first term.

It was a chaotic time.

Annanda: But—but a historic time nonetheless.

You know, bless you, Keisha. I don't know if I could've had the stamina!

I kind of look at that time as a beginning of a—as a major shift. I mean, I can go back to Bush and Gore, but I went on YouTube and looked at past political debates from when I was a kid, and then from when I was a teenager and then now, and you can see like the disintegration of rhetoric and actually of knowledge of government and how it functions.

It was actually quite frightening to me to see how quickly bipartisanship and actually knowledge of governance has unraveled. And I think about [Gen] Zs, for whom this has always been their political reality. They have only known a dysfunctional government.

Keisha: Yeah, it’s a maze…

SOUND: Curious sound

So we brought in someone who could guide us through it.

Keisha: Dr. Nelson, thank you for being here.

Alondra: A pleasure to be with you both.

Keisha: Dr. Alondra Nelson was the deputy director for science and society at the Biden-Harris White House Office of Science and Technology Policy (OSTP) from 2021-2023.

Alondra: I have been very forthright in writing about problems of American society and the ways that African American communities in particular have both been innovators and also struggled very hard in American society to sort of compel it to live up to its professed ideals…

Keisha: Dr. Nelson told us about how she ended up as the first Black woman to hold her position. It started two presidential terms earlier, with relationships she built while writing a book about the Obama White House.

Alondra: I never thought I would serve in government.

Since 2016, I had been working on a book about the White House Office of Science and Technology Policy under the Obama administration. I was very interested in the kind of creative, innovative work that office was doing under a Black presidency, and in President Obama's personal interest in science and technology as a policymaker and also as a kind of self-identified nerd.

I think when the history books are kind of written about that presidency, science and technology policy will be a really central part of it. I think it's one of the lesser acknowledged significant things of that administration.

One of President Obama's last foreign trips before he leaves office, he goes to Hiroshima, to the Hiroshima Memorial Park…

Audio clip: Obama at Hiroshima (C-SPAN), 7:05 to 7:27. The line Alondra referenced: “Technological progress without equivalent progress in human institutions can doom us. The Scientific Revolution that led to the splitting of an atom requires a moral revolution as well.”

Keisha: This idea of parallel technological and ethical revolutions is what was behind Obama forming the Presidential Commission for the Study of Bioethical Issues.

Alondra: You see in the administration this sense that science and technology policy really shouldn't and can't be unmoored from thinking about the historical context and social implications of science and technology.

Annanda: One thing I appreciate about Obama: that man, as a politician, could tap into his emotions about the suffering of people. And I wish I saw more of that in general... I wish that was a standard.

Keisha: Yes, a little emotional awareness and capacity.

Annanda: Yeah, I want a lot, to be honest. *laughs* Not a little. I want—

Keisha: Fair!

Annanda: Not that one is run by their emotions, but to be able to tap into their own humanity and the humanity of others, especially those who are different from them.

Keisha: I listened to the speech and I'm struck by that too. I'm wrestling with whether it ended at the emotional performance of that moment. He was the first sitting U.S. president to visit Hiroshima, more than 70 years after the bombing, but he didn't actually apologize to the people of Japan for the U.S. dropping the atomic bomb. He was present as the president, and it was extraordinary, and he acknowledged the moral breakdown, and he hugged the survivors. But I'm wondering if there was actual moral repair there.

Is there actual moral repair if you're not apologizing or taking responsibility for it? What do you think?

Annanda: I think that's up for the survivors, those who are morally injured. I think there is moral injury for sure in United States foreign policy. And I wonder what does repair look like for politicians who are in seats of power at times of war, making decisions as big as dropping an atom bomb.

Keisha: Right.

Annanda: The phrases “moral injury” and “moral repair” actually come from the military in the 80s, because they were trying to figure out what these troops were going through. It's like PTSD, but it's different. They could treat PTSD, but there was this other thing showing up as a comorbidity, and that is moral injury. And the impacts of it are profound.

Keisha: Mmmm. Mm hmm.

So there was a history-making moment with Obama at Hiroshima. And then back home, as Dr. Nelson joined the Biden-Harris administration, they were about to face another.

Alondra: We were at the high-water mark of a once-in-a-generation pandemic.

I mean people were just dying. In Brazil, in the African subcontinent, in Harlem, where I live, and the sort of racial demographics of that, the racial inequality of that was just raw and real.

SOUND: conversation heading toward a break

Keisha: Phew: I can't even believe it's been four years since the pandemic started.

Annanda: You can't believe it? Oh, I can. *laughs* Why? Say more.

Keisha: It feels almost like world before, world after. We've collectively gone through multiple layers of moral injury, including abandonment from the people whose role in the political system is to protect and care for the public.

Alondra: What situation would the United States have to be in for an African American woman who studies health inequality and racial inequality to be invited to be a deputy at the White House Office of Science and Technology Policy?

Keisha: You’ve heard of the “glass ceiling,” the career limits you can see through but not break through. Being promoted into crisis is called the “glass cliff.” And it’s a setup.

Annanda: Being a chaplain in the hospital around that time, that was definitely a cliff. Life is forever changed when you're dealing with six to eight deaths a day on your on-call shift, right? Just death after death after death…

But I actually felt safer in the hospital than any other place. How do you long term manage supporting people in that much grief, bereavement, and death?

Keisha: What I love about what you said was not just to care for others, but also to care for yourself in the midst of caring.

People who are in cliff situations, they don't get that part of the resource. They might get enough to care for others or do the job function, but they usually will not get the stuff that keeps them nourished and able to navigate the change.

Alondra: The economic inequality, the health inequality, the pandemic, were what we were going into office to help mitigate the best we could. I don’t know if it’ll ever happen again, where government leadership was seeking people who had thought about issues of inequity and innovation, inequality in science and technology. I happened to be… one of those people.

SOUND: break cue

Keisha: Glass cliff or not, Dr. Nelson went into government to help change the climate in tech. After the break, we’ll hear all about that.

BREAK

[9:55] SEGMENT B: How equity improves the process

Annanda: We’re back with Professor Alondra Nelson, former deputy at the White House Office of Science and Technology Policy. We've been thinking about how she got into government and how a moral revolution could help shape tech. But how does influencing government policies really work?

SOUND: break cue ends

Keisha: Going back to what you said about the pandemic or the early part of the pandemic being a once in a century event or experience, when it comes to emerging technologies like machine learning or AI or the mRNA vaccines, who has the greatest influence on what regulations around those things actually look like?

Alondra: The mRNA vaccine falls under the U.S. Food and Drug Administration. But certain things were accelerated. I mean, we did clinical trials very quickly.

We were running them in parallel as opposed to waiting for one to finish and another to start. So in that case, it was the regulatory authorities that were in place, the usual channels, just accelerated. We had something called Operation Warp Speed, and the whole point of that was to do things much quicker than usual.

I think when you talk about things like automated systems and AI, it gets a little more complicated, in part because (and I’ve also worked on human genetics and genomics as an emerging field) when you get the collision or collaboration (pick your C-word!) between market-driven innovation and sometimes really exciting products and possibilities with a PR hype cycle, regulation becomes harder. You know, in the public sphere, people say, “Oh, government’s so slow and they can’t regulate” and all of that. But hype cycles also just make it sound like you can’t regulate it.

Like, this new, magical, direct to consumer genomics is coming at you, and we've never seen anything like it in the world. Cut out “genomics” and put in “generative AI,” and it's like, how could you even think you could regulate this? This is like, magic, you know, and it's cool, and there'll be food for everybody, and we'll cure cancer, and everyone's gonna have a house, and the robots will be here, and it'll be awesome!

So, there is this combination of hype cycle and material interests of venture capitalists and companies that make regulation hard, and the hard part is not necessarily that we can't keep up. It's how do you regulate a fever dream? How do you regulate a hype cycle?

That said, it can be regulated!

Annanda: Y’know, when Alondra says, how do you regulate a fever dream? And then she talks about the mRNA vaccine. I remember my beloved gay uncle, Michael, being really freaked out during the pandemic because it reminded him of HIV/AIDS.

Keisha: Right.

Annanda: And for those who don't have older gay men as friends: they survived the AIDS epidemic. We wouldn't have the mRNA vaccine for COVID as fast as we did had it not been for the years and years and years of HIV/AIDS activists who demanded research and a search for a cure in spite of government and in spite of the church and other religious institutions.

Keisha: The AIDS virus and the mRNA vaccine for COVID? How was that connected?

Annanda: Research on HIV/AIDS advanced our understanding of how viruses can manipulate the immune system. The way for our immune systems to attack these unwanted viruses is by targeting specific viral proteins. Viruses have proteins as part of their makeup, and mRNA vaccines work by prompting our cells to make one specific viral protein, which trains the immune system to recognize and attack that protein on the real virus.

Right?

In the case of COVID, the spike protein is the key protein that our immune system must attack for the COVID-19 vaccine to be effective. They took research founded in HIV/AIDS, which showed that to kill this particular virus, you have to target a very specific viral protein. And… it’s from there that the COVID-19 vaccine was able to jump off.

Without gay men, trans folk, queers, lesbians, there would arguably not be a COVID vaccine at the speed the world received it.

Keisha: Right.

Annanda: mRNA vaccines were bought with gay, queer, and Black death, if we consider the pandemic ongoing in Africa and among African American populations.

And the truth of the matter is, it was and still is.

Keisha: Yes. What we heard from that generation of organizers was not taking the given world for granted. Not treating it like it came down from the mountain, like the tablets, and nobody can touch it.

And that's what breaks the fever dream. To say the world as it is was made, and can be remade. We can do something about it. And we're going to do something about it. That's a critical trait we're gonna need to navigate this latest round of “It's magic. It came down from the mountain. It's scary. We can't touch it.” But I think that's the part that Alondra's talking about: it can be regulated, but we have to take responsibility to do it.

SOUND: shift to prefigure interview audio

When we talk about regulation, it's really important to be able to understand, as you were saying of politicians, how does the system actually work and therefore what is my influence in it? What's my moral responsibility in it?

Like us, Alondra also had to figure out her influence within the system she was embedded in.

Alondra: The President of the United States is the Commander in Chief, the highest in the land.

It's a very powerful office, but with regards to legislation, it's a very weak office. The executive branch doesn't make legislation, and it sounds silly to say it, because you're working in the White House.

But you know, people will say, “Why can’t President Biden or Vice President Harris just, like, make the companies stop X, do X, do Y?” And you just can't. It's a free society, so you can't tell companies to do anything, and it is the job of Congress to do that. So the question becomes, in the domain of the powers, soft or otherwise, that the executive branch has, what can you do?

You can convey a vision, you can make a case, and assert a theory for how things might be done. Everyone could just ignore you. It has no power or anything. And so the Blueprint for an AI Bill of Rights was an attempt to both model what might be done and also to advance a conversation.

Keisha: Fortunately, it was a conversation that had already started. Not just in the US but even in UN agencies and global trade organizations. Leaders were starting to think about “safety in artificial intelligence” and setting common standards for technologies that members of the public would use. And that led to the publication of the White House’s Blueprint for an AI Bill of Rights in October 2022, which Dr. Nelson and her team framed:

Alondra: Part of what we tried to do with the AI Bill of Rights was to say, if systems are supposed to be safe and effective, what processes does one use to guarantee that that's the case?

So organizations that are in charge of protecting consumers could intervene and ensure that AI tools and products, automated systems that are released for consumer use, have sort of met that standard consumer bar.

But you could only imagine doing that if you didn't think that AI was like magic, right? Is ChatGPT a special, nuanced, fascinating consumer product, but a consumer product nevertheless?

Or is it in some other category that we can't even imagine regulating?

Whether or not you're talking about existing algorithmic systems or future ones, they should be safe and effective. They shouldn't violate people's rights. They shouldn't discriminate against people. People should have some sort of alternative or fallback in using the systems. And they should have some expectation of privacy.

All fairly common sense things, but you can really only get there if you anchor in the kind of fundamental rights that people have, regardless of what technology you're talking about, even if you’re talking about quantum computing, it should not be a vector for discrimination in your life.

It might affect discrimination in different ways, but the outcome for people's lived experience shouldn't be that they have unsafe systems or, you know, lose their privacy, or that they're discriminated against by a new or emerging technology.

Keisha: I'm curious about what you were hearing from the public during the yearlong listening process.

Alondra: We talked a lot to the people. When my appointment was announced, I was able to give a short speech. I thanked my mom and my daddy and my ancestors and my family, but I also said, “What are we going to do, effectively, about algorithmic bias?”

Because that was an issue that people were talking about. The film that Joy Buolamwini is involved in, Coded Bias, had come out. Timnit Gebru had been fired unceremoniously from Google for raising issues around generative AI. And so there was already a public conversation happening, and there was a responsibility on the part of folks in government to respond to that.

SOUND: background music

Keisha: Trust in institutions like the government is the lowest it’s been for nearly 70 years. But here Professor Nelson’s describing government actually listening to members of the public.

They’re listening to conversations happening in media, journals, books and industry chats. And they’re also actively soliciting input in some interesting ways, thinking about equity and access as they do it. This reminds me of the call-and-response communication pattern used across the Black diaspora. It’s a way of interacting between speaker and audience that assumes both are an essential part of the experience and both have something valuable to add.

Alondra: The FDA has public listening sessions where anyone can come and speak their piece for two minutes. We did several of those at different times of day, so people could come after work, before work, after school…

We had high school students very worried about algorithmic bias and discrimination as we’re putting metal detectors and surveillance cameras and these sorts of things in their schools.

And then we had office hours. So we met with pastors and rabbis, researchers, sometimes industry people. We tried to talk to as many people as possible and to reach them beyond the typical process in Washington, D.C., which is often responding to a request for comment. I imagine that in a couple of years, we'll use privacy-preserving generative AI to filter through this kind of data.

Yeah, people do read them and they also become part of the historical record.

Keisha: Not many congressional reps or senators have a science background. And some people say it's probably unlikely we could get lawmakers to know enough about new technology to regulate it quickly in an informed way. What’s your perspective?

Alondra: When the Office of Management and Budget was making initial decisions about whether government should adopt personal computers or buy the Microsoft Office Suite, we didn’t think you had to have a PhD in computer science to make a policy decision around this.

I don’t want to suggest that artificial intelligence is not a powerful technology. This is a transformative technology for pretty much every facet of life.

At the same time, if we can have more legislators in government who understand technology and have that background, that is only to the good, in the same way that having legislators who have been doctors or nurses or medical professionals working on healthcare policy or public health policy is only to the good.

But think about the AI Bill of Rights: if algorithmic systems are supposed to be benefiting and enhancing people's lives, benefiting and enhancing the experience of workers, and they're not doing that, a policymaker at the Department of Labor doesn't need expertise in AI to make that distinction.

Keisha: So the underlying ethic, the agreed core values, become the touchstone for everyone in the system, regardless of role. Values and ethics are how equity and other emerging norms can reshape organizations, industries, and entire countries.

SOUND: background sound ends

Keisha: You've talked a little bit about who's missing from the rooms when tech like AI is developed. Why does it matter if most of the people designing new tech come from the same cluster of schools or share the same social class?

Alondra: Oh, gosh. Because we keep getting it wrong. We keep getting it wrong.

I spent a lot of my career teaching introduction to medical sociology, some history of public health, sociology of health and illness.

And even if we just go back a hundred years and think about some of the early clinical trials or early approvals for pharmaceuticals that were only tested in men, right? And it was assumed that they were just supposed to work on everyone, you know?

So, in some cases, they were only tested on white men.

Ruha Benjamin has examples of this from the design space, where you know, we've designed a chair, we've designed a seatbelt for this car, and it should fit everybody, and it just doesn't.

There's some instances, particularly when drugs were thought to be dangerous, where they might have been tested on veterans or on African Americans, other marginalized communities.

Keisha: Now, this isn’t a “might have been.” This is a “was.” Go look up Operation Whitecoat!

Alondra: We have quite a lot of research that shows us how science and technologies have been drivers of inequality. The Tuskegee Syphilis Study was stood up by the U.S. Public Health Service, a body of the U.S. federal government, and it left, you know, nearly 400 Black men untreated for syphilis after we had penicillin and knew how to treat it. The legacy of that study has been to build and fester mistrust and distrust of science, of technology, and of government in African American communities, and frankly in Black and Brown communities outside of the United States as well.

There’s so much rich research on the impact and legacy of that study, and how it shapes people's help-seeking behaviors. And often, becomes a barrier to people feeling like they have enough trust to get the health care that they need and deserve, or to participate in clinical trials and these sorts of things.

You can't just act like that didn't happen and then say, everybody go get a vaccine. If we're thinking about the pandemic, equitable science and technology policy means a government that's willing to say we have gotten this really wrong, not a boosterism that's like everyone should get a vaccine because the American government is great, go.

Being forthright about those mistakes, about those traumas, is really important.

And some of these mistakes are so basic, but you don't have anyone in the room who's saying, like, I don't know, that seatbelt doesn't fit me. I'm taller, shorter, bigger, wider. Those are just fundamental basic questions.

And then when you have algorithmic systems that are based on, often, historical data, or on people making choices about data that then get baked in (almost hardwired, as a metaphor) into algorithmic systems, those choices become path dependencies. There's one way that they're going, and it sort of narrows the possibility for what the outputs can be: what the answers can be, what the quote-unquote predictions can be, if you're talking about a predictive system.

And so it doesn't matter in the sense of “get a rainbow coalition in every Google seminar room and things are going to be better,” but it does matter in the design phase of algorithms and the design phase of lots of science and its applications, where very basic errors and very wrong assumptions could certainly benefit from having a broader perspective of people in the room.

And then of course, not everyone will share this, but there's just an equity mandate: if a company is making tools and resources that are literally going to be used (if you're talking about the big tech companies, Apple, Amazon, Microsoft) by potentially everyone in the world, there should be some obligation for some kind of democratic participation in shaping those outcomes, even if those folks are not stakeholders and don't own the company. What is an equitable responsibility in relationship to the public and to the consumer?

Keisha: Equity is about defining key populations and getting the data that describe their situation. It’s also about telling the truth about what happened to them, who did it, what gaps resulted, and what it now takes to make up the difference.

Annanda… if we understand equity that way, does that make “moral repair” a kind of equity practice?

Annanda: I think it's a both-and. In order to have moral repair, we first have to name the injury, right? We can't heal what we don't name. There's collective moral repair, and there's individual. And so, when I think about equity, I think we're trying to repair something collectively.

Keisha: Right. Right.

Annanda: And what we know about moral repair is in order for that repair to occur, acknowledgement and hearing of the transgressions have to take place.

SOUND: investigative / curious cue

So people actually do need to be nonjudgmentally heard in their pain, seen and witnessed in their pain, which is something that we as a culture and as a country struggle with.

Keisha: Mmm, yes.

Annanda: Dr. Nelson broke down equity in a recent speech at the Federation of American Scientists awards:

CLIP FROM ALONDRA: Audio from Alondra Nelson’s speech at the Federation of American Scientists Awards (2023) (2:10-2:33)

“Equity isn't just who you serve. It's who is at the table when the policies are made. It's that the policies work and proactively uplift all. It's also who has access to the research, the data, the tools, and the resources to pursue the answers. We work to ensure greater access to and support within STEM fields for more people and to more immediately open federally funded research to all American taxpayers regardless of their ability to pay.” —Alondra Nelson

SOUND: interview cue

Alondra: We also saw scientific researchers talking about the danger of trying to go to their field site during a pandemic. People would be like, “Who is this Black woman with a backpack out here in the woods? We're in a lockdown; what are you doing out here?”

And we started to hear stories about researchers who didn't feel that they had mobility. That would shape an office [OSTP] that does science and technology policy, but also has responsibility for the research ecosystem.

What was the responsibility of government to help people think about how else they can do field research or building a conversation in the scientific community about the fact that there's not equitable access to field research, right? So you're being asked to publish papers or get tenure but you don't actually have full access to the resources you need to work at the height of your powers as a researcher. A few years ago, I ran an organization called the Social Science Research Council, and we had a grant for people doing international field work.

And we had a very unequal fellowship experience, right, so we had people without caregiving responsibilities who could take the fellowship and use all the money for their field sites and all of that. And then we had a population of fellows who had to find child care, had to find elder care and were trying to make that fellowship money stretch farther.

And then once they got to the field, often faced vulnerability in the field by virtue of their gender or sexual identity. And so those are the things that are part of a conversation about equitable science and technology policy.

Annanda: Repair isn't just “I'm sorry”, it's what structures then need to be put in place to rebuild trust and to build a new sense of hope. Repairing is mending the harm, and creating a new moral covenant or equity agreement that this harm will not be repeated.

Otherwise, if the harm is repeated, you're continuing the pattern of distrust and injury, making repair more difficult, and there is a place of no return.

It's been argued by scholars that the harm of chattel slavery was so great that there is a place of no return with that.

The same with Native Americans: there's no way to repair the Indigenous genocide—

Keisha: Because it's ongoing.

Annanda: You're not going to be able to repair what was done in the past, but how do you repair how those descendants are treated in the future, right?

Keisha: Yeah, so changing these sorts of patterns of disparate access and not being taken into account requires a systemic response, a different way of investing resources, a different way of taking in information from a population and a different way of designing for solutions to serve them.

And it was at the Social Science Research Council that Dr. Nelson helped to put together a major fellowship and reshaped that model for funding participants that took into account their research, sure, but also their life contexts, the life contexts in which they were doing that research. Were they parents or elder caregivers? Were they experiencing strain? Where? And how can the resource then serve those conditions?

Annanda: I look at the United States and your medical care is not guaranteed.

Keisha: Nope, it's tied to your job. So if you lose your job, what health insurance?

Annanda: Your income is not guaranteed to be a livable wage. And so you have so many things in this country that are basic safety, security, and I actually think dignity needs that are not guaranteed.

Keisha: Small-scale experiments like universal basic income and/or a fellowship that actually treats you like a human being: those are the kinds of experiments I want to see scaled up socially, so that you don't have to be the special person who gets the fellowship and you don't have to be in the right city to have the experiment of universal basic income, but you get to have that not as an exception but as a norm.

That's what I'm looking for.

Annanda: That would be amazing. The amount of flourishing that would take place because basic needs are met. The amount of crime that would go down, the amount of stress that would decrease, the amount of health and wellness, of innovation and creativity, the amount of kindness in the world. From your lips to God’s ears.

SOUND: interview cue

Alondra: In my time at the White House Office of Science and Technology Policy, we did a kind of vision document on STEM equity and excellence. How do you support the full person?

In postdocs and research lab experiences supporting the full person meant a range of things like how do we need to think about the design of laboratories so that anyone across a spectrum of ability can imagine being a scientist and imagine doing bench research or other kinds of research. So how do you think about universal design?

Are there ways that science and technology research funding agencies and the U.S. federal government can do more to support people who are caregivers, to make sure that they stay in the pipeline because in the bigger context we don’t have enough workers, right?

We need more people working in these fields, and sometimes that conversation is an immigration conversation, right? We don't have enough scientists, technologists, and engineers coming from abroad, but we are also not doing all that we can to support people here in the United States, whether they immigrated last year or 100 years ago or were brought here during the slave trade, to do the work that they want to do in science and technology.

The ecosystem approach is about supporting the full person and also about trying to strategically and intentionally link up all of the programs. So there's the, y’know, after school program, and there's the community college program, and the worker training program, and everybody's competing for funding and doing their own thing.

SOUND: break cue starts quietly

How do we put all of these programs in an ecosystem so they're like handing the baton to each other, not just competing with each other?

So they're passing the resources and sharing the resources in a way that's accessible: more than the sum of their parts.

Keisha: From ecosystem equity to Afrofuturism and AI: more, after the break.

BREAK

[34:04] SEGMENT C: Afrofuturist and other Black Perspectives on the Problem

SOUND: Break music

Keisha: Welcome back.

We’ve explored how equity approaches can repair the entire tech policy ecosystem from regulation standards and the research environment to opportunities for young people left out of the field. It’s an orientation that, for Dr. Nelson, is rooted in Afrofuturist perspectives.

SOUND: Break music ends

Afrofuturism is a movement that blends art, philosophy, technology, and activism to address Black life and liberation.

It assumes Black people are thriving in the future—not invisible or missing like in The Jetsons, and not subjugated like in most conventional sci-fi.

Annanda: It's the ultimate form of freedom. I think it's a collective prayer, a speaking into being, of Africana Black people all the time: we're here, we will be here, we will thrive here, and us being here isn't over and against anybody else, right? It's not the supremacist notion of dominance, but it is dignity. It is respect and care.

In every Afrofuturist thing, Black people are living good, and the societies of which they are a part are thriving. It's not limited to them; those societies, those worlds, are thriving.

Keisha: Annanda, remember this?

SOUND: Fade up bars from Baaba Maal’s Wakanda theme a few minutes into the start of Black Panther. E.g. 0:01:12-1:28

Annanda: Oh my gosh, my little diaspora heart!

Keisha: I know! I know. When Black Panther came out in New York, it felt like an earthquake hit. Afrofuturism everywhere. The clothes. The memes. People doing Wakanda Forever gestures all over the place lol…

SOUND: Black Panther theme fades out

Annanda: Oh yeah. I was out here in these Bay Area streets. I went to go see it with Black Stanford, and, yeah, everybody dressed up in whatever Africana gear they had. It was beautiful.

Keisha: Yes.

Annanda: What got me with Black Panther was certain things, or ways of being, that were just so unapologetic.

Keisha: Yes.

Keisha: I saw it in Harlem, the Blackest experience I've had seeing a movie. Those examples of music, culture, and Blackness remind me of the power of Afrofuturism to cast a different vision for people, where we belong, we're in the future, we get to survive—

And it's full color. It's technology, it's innovation, style…

Annanda: And all those little STEM babies and art babies… could see themselves into the future as well. It was an Africana space: folks from the continent, from the diaspora. It was beautiful.

SOUND: Drumming in the background

Keisha: In 1998, Professor Alondra Nelson — who we’ve been hearing from in this episode — started an international Afrofuturism listserv for scholars, artists, and others. She went on to publish on the movement through the early 2000s, using journal articles and books to break down the Afrofuturist vision of “making the impossible possible” and imagining “race after the internet.”

So it’s not surprising that Professor Nelson told us about the tremendous impact Afrofuturism has on her.

Keisha: How does it shape your work?

Alondra: Oh my gosh, uh, every day in every way. Absolutely it does. I started writing about Afrofuturism in the mid-'90s, and I think as people take it up and use it and think with it and are empowered by it, now, as a concept and a movement, it's a lot more about fashion and music and food, you know; it's this beautiful, aesthetic thing. But for me, it's always been also about science and technology.

So my early work on Afrofuturism tried to have a place for all of those things. It was a community that included Nalo Hopkinson, the Caribbean science fiction writer, and Fatimah Tuggar, the Nigerian visual artist, people doing social theory, as well as people who were technologists, and the like.

Keisha: But an Afrofuturist view is quite different from how many of us, including Dr. Nelson, learned Black history and the past: a mix of tragedy and exceptional Great Men.

Alondra: The stories about technology and gender and race that were part of my upbringing were either the Great African-American First who invented the traffic light

Or you know George Washington Carver, “He’s such a genius, he did a hundred things with peanuts, like oh my god.” I’m just a regular, regular person; how do I get involved?

And then you’ve got these stories of science and technology used to expropriate, exploit, traumatize, repress. Afrofuturism, for me, has offered not an apology for any of that repression, um, and the trauma, but a way to say that's not the only story, and that even over and against that history, which continues in some instances, you've had Black people just creating beauty and innovating and doing just extraordinary things. I still marvel at the fact that in 1968, Black teenagers, 16- and 17-year-olds in Oakland, were like, we're going to do genetic testing and screening.

I mean, people didn't know what genetic testing was. We didn't have universal newborn screening for genetic disease like we do now. It's very commonplace now. Talk about imagining another world: we can't get access to these resources, and people like us are dying because of genetic disease. So let's do some tests. Like, what?

Keisha: Sidebar—

SOUND: historical sidebar note

Back in the 1960s and 70s, the Black Panther Party started free health clinics that expanded access to disease screenings including for sickle cell trait, which disproportionately impacts Black people. They started this in Oakland, and the clinics spread across the country, eventually influencing the US government to start screening programs it should have offered years before. And the early Panthers were young: many in their late teens and early 20s.

Alondra: Similarly, African American senior citizens were some of the earliest adopters of direct-to-consumer genetics. That's not how we tell that story. The history of chattel slavery has meant that Black people very much live in a world in which they're constantly seeking more information about their ancestors, about their past, about their families.

But what that has meant has been, like, tremendous innovation and a kind of courage around new and emerging technology. Afrofuturism has really been the bridge that has allowed me to tell that story. Like, who are the outré, you know, interesting, brave, ingenious people who are trying to think about science and technology and its implications in new ways?

Keisha: Coming back to the consumer side of gene tech, is there something that you'd want regulators to take a closer look at—

Alondra: What the national security and existential risk concerns, in part around generative AI, have done is make people more aware of the potential of bioweapons and of using these new kinds of technologies to accelerate the ability of people without a lot of technological or scientific expertise to potentially use these tools in malign ways.

And already people can do CRISPR gene editing in their garages.

Keisha: “People can do CRISPR gene editing in their garages”—what?? *laughs*

Annanda: I'm telling you, these Bay Area streets, give some nerds some tools. *laughs* That's all they need.

Alondra: There'd been these dividing lines, like forensic genetics and medical genetics; those are important and legit. And then there's recreational genetics; and no one cares. Part of the through line of The Social Life of DNA is that there might be different use cases for the genetics, but it's the same genetic information.

The genome of a person carries all of this information at once, and we need to be careful.

I've been kind of trying to track the thinking about genomics and AI together. I mean, part of the project of the Human Genome Project has always been a big data, supercomputing project, and so AI has been involved in some way in genomics research for a long time. It’ll be interesting to see what that means for genetics and genomics research.

President Obama announces the Precision Medicine Initiative, which is this endeavor in the United States to get a million people's DNA in a database for medical research, plans to oversample for underrepresented groups, and to do a lot of work, admirably, around consent and repeat contact with people who are subjects in the study, giving them sort of feedback and the like.

But they announce it in the East Room, and President Obama says something like, “Well, you know, if it's my genome, I like to think that that's mine,” you know, with his avuncular kind of delivery. And I think the next day there was a correction in the newspaper: if you go to the hospital and give tissue, it's not yours, actually; it belongs to the hospital. It does not belong to you.

Keisha: Even the President of the United States with all his staff and information resources—even he didn’t know that he wouldn’t own his own DNA if he went to hospital! So how much are regular folks at a disadvantage?

And what about the technologies that are emerging around us right now?

Alondra: I think, if we can not mess it up, that generative AI is really cool.

SOUND SHIFT

I mean, these tools and these systems are potentially fun, fascinating, time-saving. To the extent that people are anticipating that generative AI or some of these other tools might do work we don't want to do, I'm interested and excited about that.

I'm both interested, captivated, and daunted and worried about outer space innovation, about outer space policy. We're like throwing debris up there. There's no laws. Anybody can pretty much shoot up a satellite as long as they have the capacity to do it. You can explore up there. I think the story of technology and science, if we're wise, is the things that excite us always fill us with some trepidation as well, and our job, the job of policy and of imagination, and of good governance, is to try to mitigate the trepidation.

Keisha: Thank you again for your time this afternoon. It's been a delight.

Alondra: Thank you for your work. I've just been so honored to be hailed by you, and I really appreciated and enjoyed the conversation.

SOUND SHIFT: silence for closing reflection.

Keisha: Talking to somebody like Dr. Nelson is helpful because it gives you perspective: with all the things that go wrong, are there things that maybe are going right?

Annanda: My dad has this saying. He's like, always keep your wits about you. When he's anxious, it means one thing. When he's calm, it means another. But, you know, I think what's come up for me is that idea: what does it mean to right-size your wits… as it relates to AI?

I think it would be foolish to not have any caution and to have full abandon to the technology. I think there is a level of trust that is required because I think what could come from this can be so beautiful, y’know.

Keisha: I did get my matrilineal ancestry tested recently. The LDS church has a lot of civic records and so I've been poking around those. But for a long time, I was completely resistant for some of the same reasons you said. Um, who are these companies? What will they do with that information? What's the relationship to law enforcement?

And then on the back end, do they actually have the spread of data to interpret it for me? Like, for me and my people?

I'm really so grateful to be around at a time when, one, that thing is possible. It's possible to do that sort of genealogical study and make it generally accessible to ordinary people who don't know all about DNA and long time and migrations out of Central Africa and things like that. And to present it in a way that helps me feel like, oh, I now have a sense of my link to lineages 500 to 2,000 years ago, which before that test I did not.

Annanda: There are biomedical startups that are trying to make unique medicines that can be specified just to your DNA. Imagine how helpful that can be with a myriad of diseases. I want the Wakanda version of that.

Keisha: And not the James Bond version.

Annanda: Oh hell no! No.

There are enough incredibly wealthy people that have shown you’re going to be alright if you do right by others. And we don't highlight that enough.

SOUND: theme twinkles

All too commonly, we think that in order to be wealthy, that wealth must come at the cost of the dignified living and thriving of other people. And that's just not true.

Yes, that means there will also be a cap on how wealthy one could be because how could anybody have a right-sized relationship with the world if 1%-9% of people are hoarding so much? Like the math don’t math, right?

Keisha: Right.

Annanda: And our relationship with the world could be sustainable if folks who are wealthy honor that they’re part of a greater interdependent whole, and that when that whole thrives, they do too.

Keisha: Yes.

I'm struck by the fact that it is a choice, to go back to that question of magic and responsibility. It is a choice to choose the magic of life-giving ethics and to take in the perspectives of those who are different from you, to fill out your perspective on the world around you.

This whole panic about emerging technologies requires that any of us, any individual of us, remember that we're not here by ourselves and that we depend on the perspectives of those who are different from us to have a grounded reality and to help us design a future that's worth living in.

I end the episode with a sense that it's possible. If you take the Afrofuturist route, it's possible.

SOUND: Closing theme starts

CALL TO ACTION

Annanda: We’re building community with listeners this season. So reach out and talk to us on Instagram—our handle is @moralrepairpodcast. Also catch us on X… formerly Twitter.

We’d love to hear from you!

Keisha: Follow the show on all major audio platforms—Apple, Spotify, Audible, RSS—wherever you like to listen to podcasts.

And please help others find Moral Repair by sharing new episodes and leaving us a review.

CREDITS

Annanda: I’m Annanda Barclay.

Keisha: And I’m Keisha McKenzie.

Annanda: The Moral Repair: A Black Exploration of Tech team is us, Ari Daniel, Emmanuel Desarme, Rye Dorsey, Courtney Fleurantin, and Genevieve Sponsler. The executive producer of PRX Productions is Jocelyn Gonzales.

Keisha: Original music is by Jim Cooper and Infomercial USA. And original cover art is by Randy Price. Our two-time Ambie-nominated podcast is part of the Big Questions Project at PRX Productions, which is supported by the John Templeton Foundation.

[PRX SIGNATURE]

SHOW NOTES

Talk to us online: at Instagram (@moralrepairpodcast), on X (@moralrepair), and on LinkedIn:

The Social Text Afrofuturism issue: https://www.dukeupress.edu/afrofuturism-1

About the Black Panther Party’s clinics: https://www.blackpast.org/african-american-history/institutions-african-american-history/black-panther-partys-free-medical-clinics-1969-1975/

“No Justice, No Health”: https://link.springer.com/article/10.1007/s12111-019-09450-w

Nelson + Lander explain the AI Bill of Rights (WIRED) https://www.wired.com/story/opinion-bill-of-rights-artificial-intelligence/

How many medical tech advances came from HIV-AIDS research: https://www.princeton.edu/~ota/disk2/1990/9026/902612.PDF

Interaction Question

What piece of emerging technology are you excited about right now?

Political Strategies & AI

Season 2 | Episode 2

It’s a major election year in American politics. This episode explores the big question: how is AI used in American political decision-making? What are the tools out there? How do they impact the political process? While this episode will not be political, it will touch on the evolution of political culture via AI and the impact it has on the everyday person. Our episode features special guest Bruce Schneier, a cybersecurity expert at the Harvard Kennedy School.

-

The Big Question

This episode explores the big question: how is AI used in American political decision-making? What are the tools out there, and how do they impact the political process? While this episode will not be political, it will touch on the evolution of political culture via algorithms and AI, and how that impacts the everyday person.

What Utility Does Bruce Serve? Informing us and our audience on the perils and possibilities of AI and Democracy

Intro

Annanda: Hi, Keisha.

Keisha: Hey, Annanda.

Annanda: Do you remember the T, the scandal that was Cambridge Analytica two U. S. presidential election cycles ago?

Keisha: I remember that name and I remember lots of drama on social media, but tell me about it.

Annanda: So, long story short, Cambridge Analytica was a political consulting firm known for its use of data analytics and targeted advertising in political campaigns. The company gained notoriety for its role in the 2016 U.S. presidential election and the Brexit referendum, particularly for its controversial methods of harvesting and using personal data from Facebook users without their consent. This data was then analyzed and used to create detailed psychological profiles to deliver hyper-targeted political advertisements. In the 2016 U.S. presidential election and the Brexit referendum, these tactics were employed to influence voter behavior and public opinion.

Annanda: Listen back to this epic interview with whistleblower Christopher Wylie, a data analyst formerly employed by Cambridge Analytica, interviewed by Carole Cadwalladr of The Guardian.

SOUND: shift to historical cue

[audio (0:28-0:58)]

Christopher Wylie: Throughout history, you have examples of grossly unethical experiments, um…

Carol Cadwalladr: And is that what this was?

Christopher Wylie: I think that you know, yes. It was a grossly unethical experiment. Because you are playing with an entire country. The psychology of an entire country without their consent or awareness. And not only are you like playing with the psychology of an entire nation, you’re playing with the psychology of an entire nation, in the context of the democratic process.

SOUND: end historical cue

Annanda: What does that bring up for you?

Keisha: Okay. So I remember. I was still using Facebook back then, but there was a whole scandal about people feeling hyperexposed. They were doing these free quizzes, Farmville or whatever, and surveys. And they were like, who's taking the survey data?

Who are they then selling it to? And why can't I just do a free quiz on my social media and be left alone? I know a lot of people who got up off of Facebook that year. And then, it was already changing, like the atmosphere of the site. It was less personal and more agitated and aggressive. So yeah, it did start to sour people on the whole social media thing.

The casual, connecting internet that we were promised: I think that was the decade we lost it.

Annanda: That makes sense.

2016 was definitely the year that I got off of Facebook and social media. Yeah. And this year is the year that I'm back on because of this podcast, and now it's like, oh, you really can't run a business or do anything without it. It's more of a utility for me now.

Keisha: Yeah. Yeah, and I feel like 2016, at least in the UK, was the year of Brexit, Britain leaving the EU by referendum. Everything up to that pulled on the same sorts of strings as the Cambridge Analytica scandal. So lots of behind-the-scenes data manipulation, changing what people saw, reading people's, or trying to read people's, emotions.

So yeah. Big season.

Annanda: Big season indeed. It's never all private.

Keisha: No.

Annanda: Yeah.

Keisha: No. Mm hmm.

Annanda: Cambridge Analytica speaks to the high-stakes possibility of collective moral injury, regardless of who's in office and from what political party. The use of technology when it comes to political strategy is not a political question, but an ethical one.

When public ethics are violated, it's likely that moral injury follows. Moral injury occurs because political violations betray our sense of trust in government, directly impacting our lives with outcomes based on decisions that violate our moral and ethical consent.

Keisha: Yeah, with the scale at which government can act on people, it doesn't really matter whether you're directly affected, like if you don't use a particular service, or if you didn't submit particular data, it's just like the overall climate around you becomes corroded in some way.

So you have a stake. And so even if you're not the person who's going to get a check because there's a class action suit, the whole soup that you're in, like, we all get to be part of that.

Annanda: Yeah. So if we take the class action, Keisha. Like, all right, so I'm not gonna get a check because some company did me dirty with mesothelioma, right?

I don't have mesothelioma, but why should I care that a class action lawsuit is happening for folks with mesothelioma? Like, how does that impact me?

Keisha: Well, the hope is that the action for that particular company that did something wrong sends a warning shot to all the companies who think that they could get away with a similar sort of effect.

And so you hope that the specific remedy to the people directly impacted changes the climate around them. Kind of like what you were saying last episode, that it's the people who are directly impacted who shape what a moral repair looks like. The purpose of that moral repair is to change it so that the injury doesn't happen ever again.

And so, when it comes to political issues, people who can vote, they have a direct influence on the people who are at least in theory—Let me reframe that.

Annanda: I mean, no, no, we have to say we have an impact. Otherwise, there's nothing left, Keisha. There's nothing left in this dumpster fire that is the United States.

Keisha: Okay. Rewind.

Annanda: I need to believe that my vote can light a fire under multiple people's behinds who serve the public.

Keisha: Yeah. Cause that's one of the levers we have and we have to pull all the levers.

Annanda: Yes.

Keisha: So the people who can vote have a particular influence in the system, but even people who can't vote, the nonimmigrants, the student workers, the people who are on short-term labor contracts, are still affected by the outcomes of the system that the voters represent and influence. So I think about these sorts of processes in a wider circle than just who's directly in the target.

The person who might have their information released is one person, yes, but all of us are implicated, because what can potentially happen to them can happen to us, and often does.

Annanda: Got it. So you're talking about accountability and deterrence.

Keisha: Yeah, accountability, deterrence, and then being in solidarity because we're all connected. So like thinking about how we pay attention to the wellbeing of everybody is basically what politics is all about.

Annanda: Hear, hear! I mean, that is the idea of a democracy. Rumor has it.

Keisha: I've heard legends.

Annanda: And myths. Ahh

The goal of any political strategy is to win. And so the questions understandably surface in such a massive global election year. What role does AI play in the political strategy of winning? Where should we focus our concerns? How do we manage our anxieties? And the big question of this episode is: What is an ethical win for the public when it comes to technology and political strategy?

I'm Annanda Barclay.

Keisha: I'm Keisha McKenzie.

Annanda: And this is Moral Repair: A Black Exploration of Tech, a show where we explore the social and moral impacts of tech and share wisdom from Africana culture for caring and repairing what tech has broken.

Keisha: When we come back, we'll talk with Bruce Schneier, a fellow and lecturer in public policy at the Harvard Kennedy School and Berkman Klein Center for Internet and Society.

[BREAK]

SEGMENT A

Keisha: Bruce Schneier calls himself a public interest technologist, and he works at the intersection of security, technology, and people.

Annanda: In your most recent essay on your website, you talk about how the frontier became the slogan of uncontrolled AI. Say more about that. For our listeners, what is the frontier? What are you referring to?

Bruce: Frontier is what we [00:09:00] talk about when we talk about the newest AI models. The term you will hear from the researchers, the VCs, and the companies is "frontier models." These are models on the edge. These are the models that are doing the best. These are the ones that cost hundreds of millions of dollars, and a lot of energy, to create.

And it's a complicated metaphor, right? Frontier as in "the final frontier": space, Star Trek. As if it didn't come from the American West and the subjugation of the native population, which is the legacy of the frontier in this country.

Bruce: And when you peel back the edges of AI, you see a lot of frontier thinking. You see a lot of colonization, a lot of "the data is there for the taking, no one has it, we're going to take it."

There's a lot of rule breaking, right? The frontier was all about "the rules don't apply, [00:10:00] and we're going to make our own rules." Think of the American West and the cowboy, that metaphor. So it is complicated. And I worry that we are making some mistakes in how we think about AI and in our rush to create AI.

Keisha: What would we need to do either with AI or something else to avert the worst impacts of degraded quality in public debate?

Bruce: Right now AI is not helping. Right now there is so much AI nonsense being created by sites that realize they can fire the humans who write the stuff. And this isn't new.

And [00:11:00] we have seen AI-generated content for years in three areas: sports, finance, and fashion. Those are three areas where the writing was kind of formulaic, it was stylistic, it didn't take a lot of creativity to write the article. And AI has been writing those articles for a while now, but now it's writing more stuff, and it's not very good.

And the AI dreck is pushing out the quality human stuff. Now, this is hard. Some of it is our fault. We as media consumers don't demand quality in our reporting, in what we consume. So we accept poor-quality things. But I think AI has the potential to write very nuanced articles about issues that matter.

I think about local politics again, right? We've had the death of local journalism because of platforms like Facebook, [00:12:00] and now lots of public government meetings at the local level are effectively secret because nobody's there. There is no local reporter covering the school board or the city council in a town.

Now, AI can fix that, right? AI is actually really good at summarizing stuff. So if the meeting is open and it's recorded, the AI can summarize it and write the article. I'd rather have a human doing this, but the problem is I don't have a human because we can't afford the human.

Or rather, we're not willing to pay the human. Let's say it that way. Of course we can afford it. And in that area, an AI is way better than nothing. It'll provide some accountability for local government.

Annanda: What would seem most urgent, if there is a sense of urgency?

Bruce: Urgent is this election. On a scale of 1 to 10, I'm pretty concerned about democracy in the United States. I see some very strong authoritarian tendencies. I see a lot of anti-democratic ways of thinking. I see a lot of people more concerned with results than with the process. Now, the annoying thing about democracy is you've got to accept it, even if the vote doesn't go your way, right?

Democracy is really not about picking the winner, but about convincing the loser to be okay with having lost. And that sometimes means your side loses. And if you're not okay with that, then you're not really for democracy. I see a lot of people in this country who are not okay with that, who think the ends justify the means.

And I don't just mean their side. I mean us too, a little bit. But I do see some very strong anti-democratic ways of thinking, and that gives me [00:14:00] pause. It turns out a lot of democracy is based on the way we do things rather than on laws. It's sort of just the way we do it, and it turns out if someone just breaks all the norms, there's not a lot that can stop them.

We learned that, and that was a surprise to many of us. So, I don't know. I like to think we are resilient. We were very resilient in 2020. We've been resilient since then, despite the rhetoric. Overall, though, there are still a lot of places where democracy is not working.

Keisha: We recently spoke to Professor Alondra Nelson, formerly deputy director at the White House Office of Science and Technology Policy. And she said that over a third of the planet is expected to have elections this year. And you said you thought democracy globally is kind of under siege. Can you share what impact you think AI might have on some of these elections and on how we think about government by the people globally?

Bruce: It's an interesting statistic, and I've heard [00:15:00] 30%, a third. I've heard 40%, I've heard over half. It kind of depends how you count.

Keisha: Mm-hmm.

Bruce: But it is the United States and the EU. And it's India. Australia will be early next year, the UK early next year. I mean, these are large countries with strong democratic traditions. And we are worried now about AI and its ability to create fakes. This is a very near-term AI risk. This is not AI subjugating humanity. This is not AI taking our jobs. This is AI making better memes.

Keisha: Mm-hmm.

Bruce: Now, that's the thing: the fear is that AI will be used to create fake audio and fake videos.

There was a fake audio recording in Slovakia's election a couple of months ago that came out a week before the actual vote, and it might've had an effect. We don't know. So we [00:16:00] worry that what the Russians did in 2016 with the Internet Research Agency, creating fake ads on Facebook and fake memes on Twitter, can be replicated at speed, at scale, and at a much lower cost.

SOUND: shift to historical cue

Annanda: What Bruce is referring to is the Russian government's interference in the 2016 U.S. election through a coordinated campaign that included hacking Democratic Party emails and disseminating misinformation across social media platforms. This interference aimed to sow discord, undermine public trust in the democratic process, and skew public opinion in favor of certain political outcomes.

SOUND: end historical cue

Keisha: I read an article that you wrote for the Ash Center back in November about the four dimensions of change.

You said speed, scale, scope, and sophistication in terms of how AI might develop. Can you give us some concrete examples of what you mean by scale versus scope so that people might understand?

Bruce: Yeah, so let's take misinformation, and why misinformation might get worse, right? Speed is one reason.

AIs can make memes and fake facts and write tweets and Facebook posts so much faster than humans can. They can operate at a speed that will outpace humans. They can do it at scale, right? They can make not just one post, but thousands, millions. You can imagine millions of [00:17:00] fake accounts, each tweeting once, instead of one account tweeting a million times.

Scope is how broad it is: on Facebook, on Twitter, in different languages, optimized for different audiences. And then sophistication: they might be able to figure out more sophisticated propaganda strategies than humans can. To me, that's what I look at when I look at these technologies, specifically when those changes in degree become changes in kind, when it's not just faster, but something else.

Bruce: So it's not just the Russian government with a hundred-plus people in a building in St. Petersburg. It's everybody. And the worry is that noise [00:18:00] will drown out the signal. Now, it's not just AI. Blaming that on AI, I think, is too easy by half. That's a lot of us, who value the horse race and who's ahead in the polls.

We're having this interview the day after President Biden's State of the Union address, and everything I'm reading is about how good it was, how bad it was. Did he make gaffes? Did he sound good? Not a lot about substance, and that's on us. That is the kind of political reporting we like, and that kind of reporting plays into memes, which plays into AI's strengths.

So the worry is that AI will make fakes, and the fakes will be disseminated. And more importantly, there's something called the liar's dividend: if there are so many fakes out there and something real happens, you can claim it's a fake. And we have seen this in the United States, right? Trump has said about some audio that it was probably a [00:19:00] deepfake when it actually wasn't. Once deepfakes are out there, you can claim that whatever is real is fake.

So I don't know if that will have an effect. We don't need AI to create memes to denigrate the other side. There's a video of Nancy Pelosi that was slowed down to make her look drunk. That wasn't a deepfake; that was just slowing down a video. Any 12-year-old can do that with tools on their phone.

But it was something that got passed around. So I don't think deepfakes are going to be that big a thing. I do worry about them close to the election, when they can't be debunked. And I worry about that ability to claim something real is fake.

Annanda: In what ways do you see the average voter being able, not to defend themselves, but to keep their guard up, with a sense of wisdom about how to navigate the way technology is being used in the political [00:20:00] process?

Bruce: It's hard. I'm not sure I know an average voter. You might not either. The people we know are hyper-aware, maybe hyper-political. When I read about the average voter, it's not the kind of people I meet at Harvard, which is very elite. The average voter, near as I can tell, doesn't get a lot of information.

Keisha: Mm hmm.

Bruce: You know, not only does telling them how to be on their guard not help; they don't know they have to be on their guard. They probably don't even know what being on their guard means. It's sort of interesting. I think about the idea that we're going to put labels on memes saying whether they're true or not.

To me, that doesn't make a lot of sense, because the people who would believe the labels don't need the labels. The people who need the labels aren't going to believe them if they're there. And I don't want to live in a world where the [00:21:00] average voter needs to be on their guard. That seems like a really not-fun place.

I want a world where the average voter is safe, where the average voter gets information they can use to make an intelligent decision about which candidate best represents their views, accurately, and can cast their vote without any undue process or intimidation or long lines or anything. That's the world I want.

A world where you don't have to be a political junkie in order to have a political voice. Where you could be someone who is just living their life in a democracy, and you still get your say. So I don't know. I don't know what to say to the average voter. I guess I'm sorry. I want it to be better.

Keisha: Bruce, you've written about how AI can shape campaign advertising, [00:22:00] communicate with voters, write legislation, distribute propaganda, submit comments.

Can you talk about how AI is showing up in the practice of democracy and campaigns, lawmaking, and regulation?

Bruce: It depends how we do it. So right now we've had examples of rulemaking processes where hundreds of thousands, millions of comments were submitted not by an AI, but by a machine, right? By a computer. It wasn't even clever. It was just multiple comments submitted by fake people. So already it's pretty bad. AI as assistive tech, if we do it right, increases democracy.

So if an AI can make you more articulate to your Congressperson, I think that's great. If the AI denies you a voice, that's bad. I think about AIs being used to ease administrative burdens, like helping people fill out government forms. Now, we can do that, and that is possible, [00:23:00] and that will engage people in democracy.

That will help them get the assistance they are legally entitled to. You could also imagine AIs being used to increase the division between the haves and the have-nots. And this is the problem with tech, right? The old saying: tech is neither good nor bad, nor is it neutral.

But we can design tech to favor democracy. We could design tech to favor the powerful. And these AI models right now are very expensive to create, and relatively free to use if you're not going to use the best model. The tech monopolies are giving them away right now. My guess is that's temporary, but they will become cheaper, they will become more available, and people will have personal AI assistants.

Now, that can be an incredible boon for equality, right? To give you an advocate where you [00:24:00] couldn't have had one before. And here I'm thinking about people for whom going to a courthouse or a government building is a burden. They have a job, they have a family, it's not easy to get around. It's always easy to say "go do this" if you're a middle-class white guy.

And it's harder the more you deviate from that kind of zero level of ease. AI can make this better. It might not, right? It really depends. But we do have an opportunity here. So I like talking about the benefits of AI for democracy, because that gives us a chance of having them.